Install Hadoop plug-in

The next step is to install and check the Hadoop plug-in for Eclipse.

- Download Eclipse for Linux and extract the archive with tar -xvzf eclipse.tar.gz

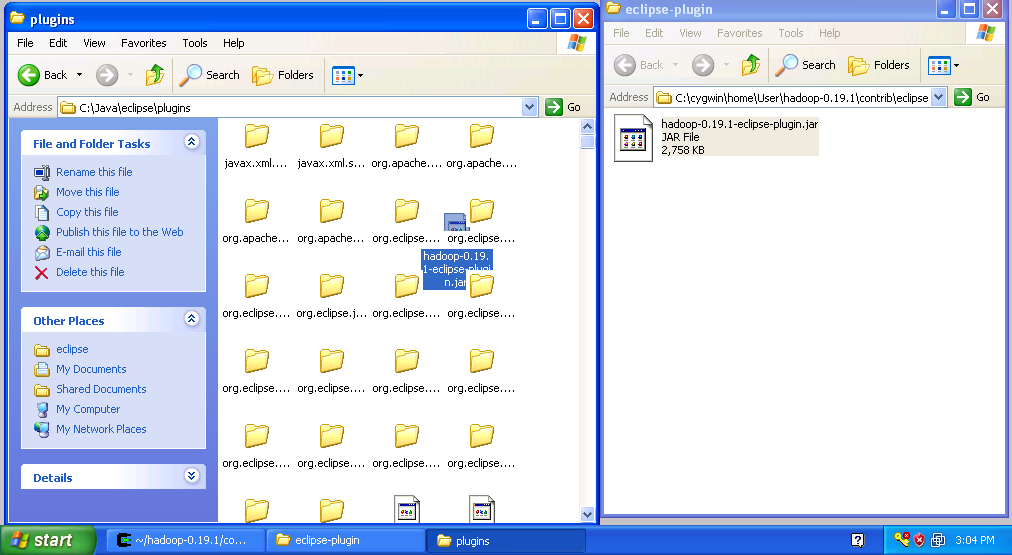

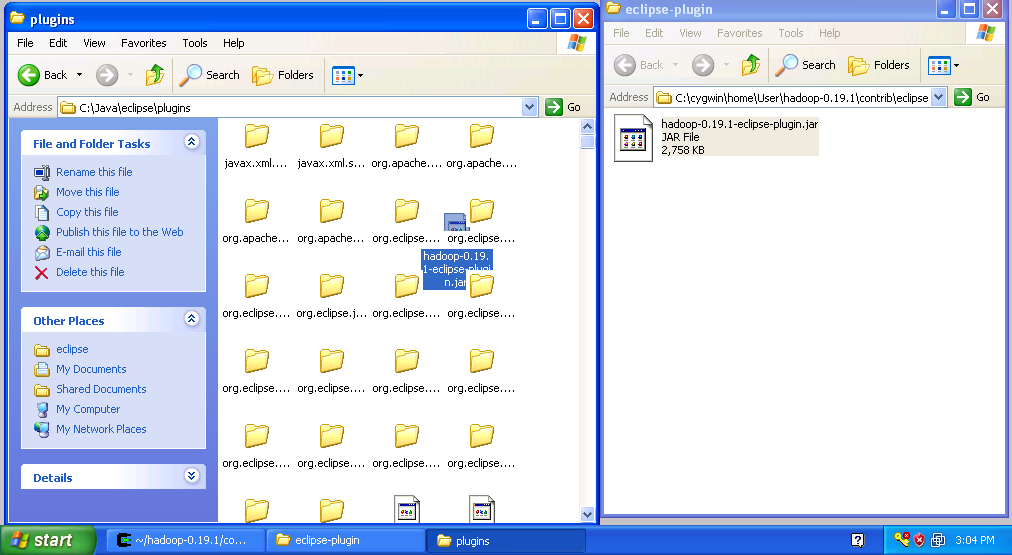

- Copy the file "hadoop-0.19.1-eclipse-plugin.jar" from the Hadoop eclipse-plugin folder to the Eclipse plugins folder as shown in the figure below.

Copy Hadoop Eclipse Plugin

- Close both windows

- Start Eclipse

- If the JDK is not already configured, configure it by adding a -vm entry to the eclipse.ini file that points to the JDK bin directory.
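As a rough illustration of the -vm entry, the fragment below shows how the relevant part of eclipse.ini might look. The JDK path and the memory settings are hypothetical placeholders, so substitute the values from your own system. The option name and its value go on separate lines, and the -vm entry has to appear before -vmargs.

```text
-vm
/usr/lib/jvm/jdk1.6.0/bin
-vmargs
-Xms40m
-Xmx256m
```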

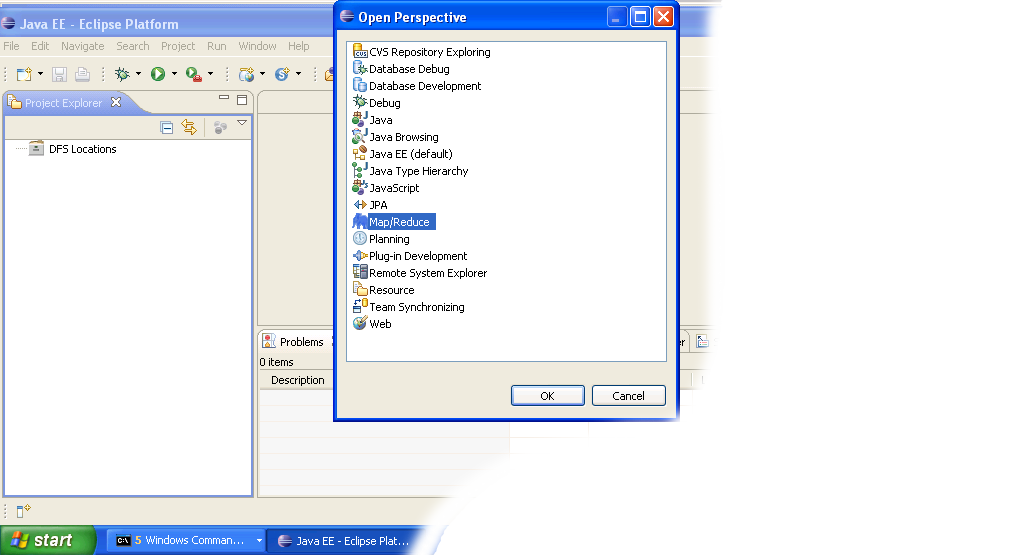

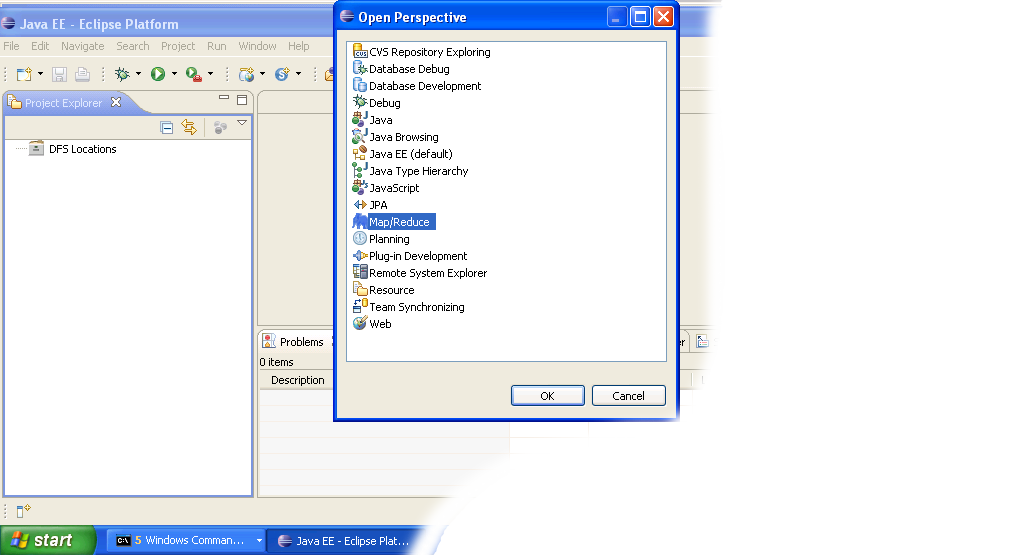

- Click on the open perspective icon, which is usually located in the upper-right corner of the Eclipse application. Then select Other from the menu.

- Select Map/Reduce from the list of perspectives and press the "OK" button.

- As a result your IDE should open a new perspective that looks similar to the image below.

Eclipse Map/Reduce Perspective

Now that we have installed and configured the Hadoop cluster and the Eclipse plug-in, it is time to test the setup by running a simple project.

Setup Hadoop Location in Eclipse

The next step is to configure the Hadoop location in the Eclipse environment.

- Launch the Eclipse environment.

- Open the Map/Reduce perspective by clicking on the open perspective icon, selecting "Other" from the menu, and then selecting "Map/Reduce" from the list of perspectives.

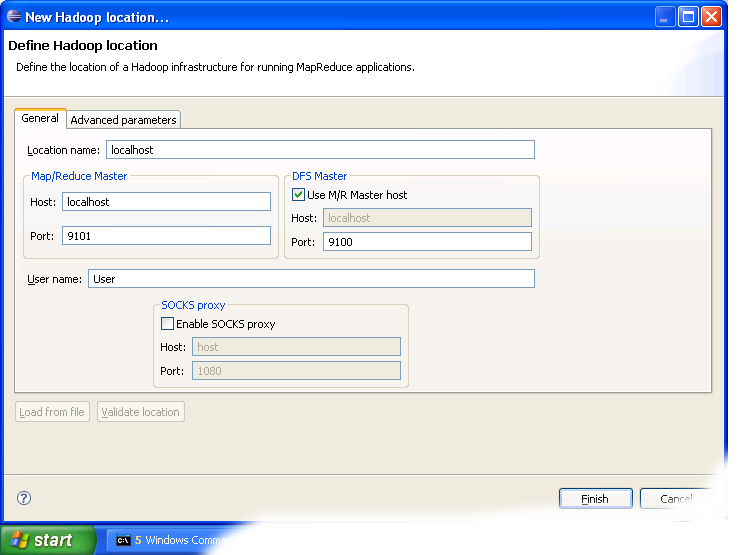

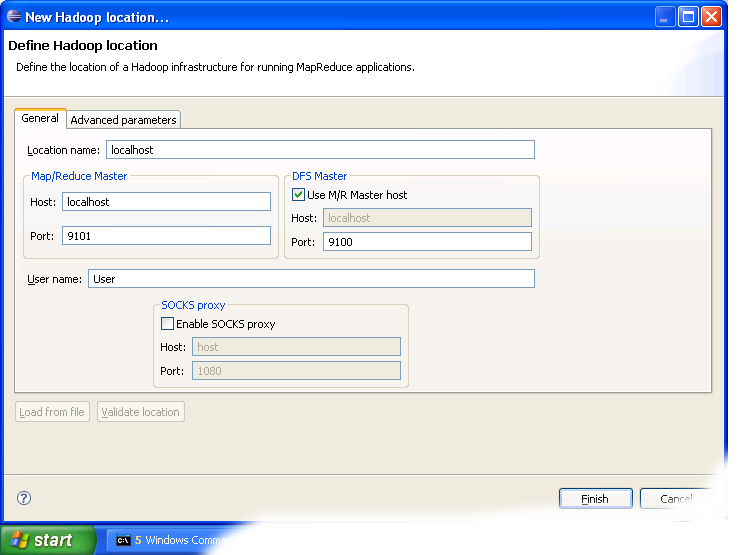

- After switching to the Map/Reduce perspective, select the Map/Reduce Locations tab located at the bottom of the Eclipse environment. Then right-click on the blank space in that tab and select "New Hadoop location..." from the context menu. You should see a dialog box similar to the one shown below.

Setting up new Map/Reduce location

- Fill in the following items, as shown in the figure above.

  - Location Name -- localhost

  - Map/Reduce Master

    - Host -- localhost

    - Port -- 9101

  - DFS Master

    - Check "Use M/R Master Host"

    - Port -- 9100

  - User name -- User

Then press the Finish button.
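If the new location later fails to connect, it can help to confirm that something is actually listening on the two ports entered in the dialog. The following is a minimal sketch using only the Java standard library. The class name HadoopPortCheck and the one-second timeout are illustrative choices, not part of Hadoop or the plug-in.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Checks whether a TCP port accepts connections (hypothetical helper, not part of Hadoop). */
final class HadoopPortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timeout, or unresolved host: treat as not reachable.
            return false;
        }
    }

    public static void main(String[] args) {
        // Host and ports are the values entered in the Hadoop location dialog.
        System.out.println("Map/Reduce Master (9101): " + isReachable("localhost", 9101, 1000));
        System.out.println("DFS Master (9100): " + isReachable("localhost", 9100, 1000));
    }
}
```

Both lines should report true when the Hadoop daemons are running with the port numbers used in this tutorial. A false result usually means the daemon is not started or is configured with a different port.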

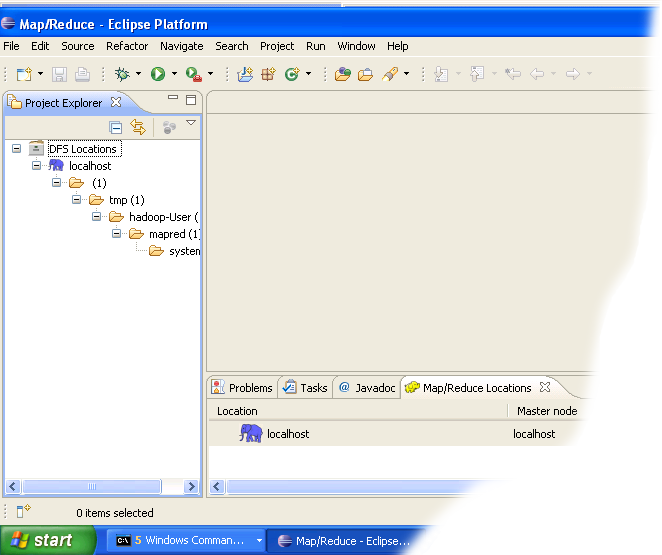

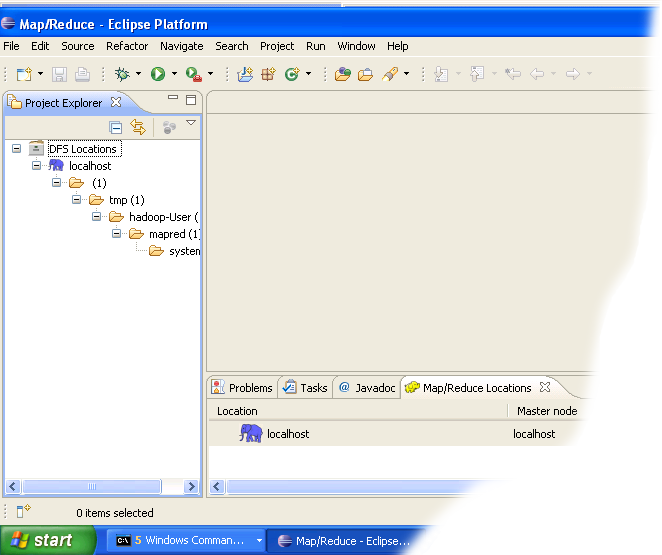

- After closing the Hadoop location settings dialog you should see a new location in the "Map/Reduce Locations" tab.

- In the Project Explorer tab on the left-hand side of the Eclipse window, find the DFS Locations item. Open it using the "+" icon on its left. Inside, you should see the localhost location reference with the blue elephant icon. Keep opening the items below it until you see something like the image below.

Browsing HDFS location

You can now move on to the next step.

Creating and configuring a Hadoop Eclipse project

- Launch Eclipse.

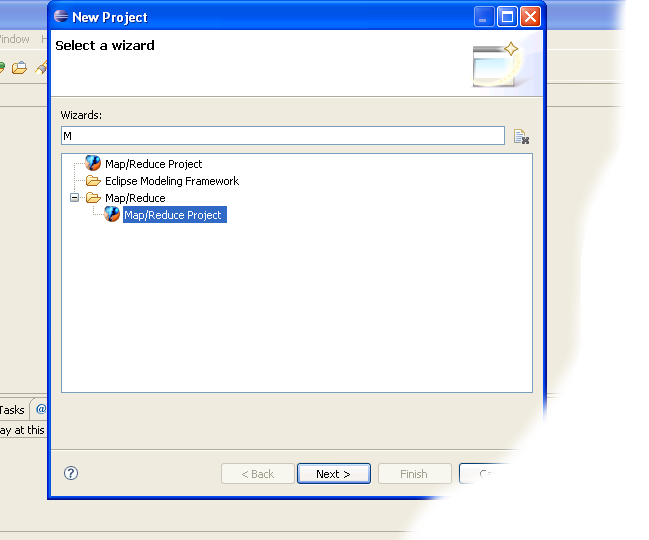

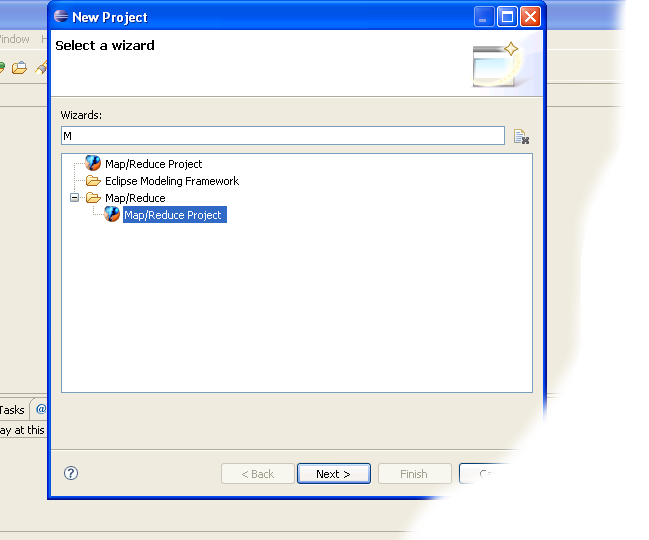

- Right-click on the blank space in the Project Explorer window and select New -> Project... to create a new project.

- Select Map/Reduce Project from the list of project types as shown in the image below.

- Press the Next button.

- You will see the project properties window, similar to the one shown below.

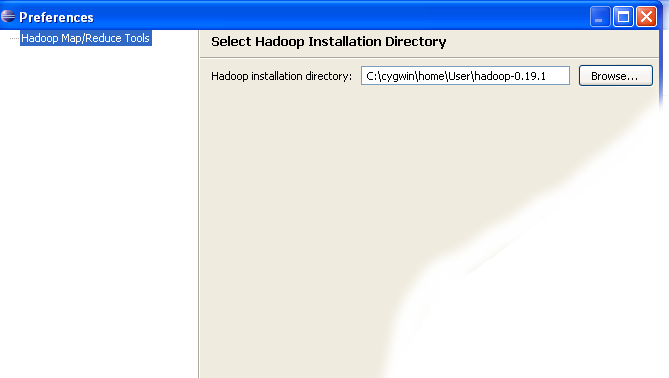

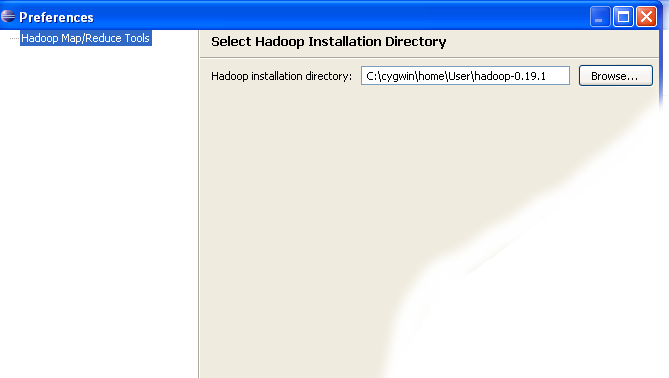

- Fill in the project name and click on the Configure Hadoop Installation link on the right-hand side of the project configuration window. This will bring up the project Preferences window shown in the image below.

- In the project Preferences window enter the location of the Hadoop directory in the Hadoop installation directory field as shown above.

If you are not sure of the location of the Hadoop home directory, refer to Step 1 of this section. The Hadoop home directory is one level up from the conf directory.
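The relationship between the conf directory and the Hadoop home directory can be sketched in a few lines of Java. The class name HadoopHome and the path /opt/hadoop-0.19.1 are hypothetical and used for illustration only. Use the location of your own installation.

```java
import java.nio.file.Path;
import java.nio.file.Paths;

/** Derives the Hadoop home directory from its conf directory (hypothetical helper). */
final class HadoopHome {

    /** Returns the directory one level above the given conf directory. */
    static Path fromConfDir(Path confDir) {
        Path normalized = confDir.toAbsolutePath().normalize();
        Path name = normalized.getFileName();
        if (name == null || !name.toString().equals("conf")) {
            throw new IllegalArgumentException("Not a conf directory: " + confDir);
        }
        return normalized.getParent();
    }

    public static void main(String[] args) {
        // Hypothetical install location; replace with your own conf directory.
        Path conf = Paths.get("/opt/hadoop-0.19.1/conf");
        System.out.println("Hadoop installation directory: " + fromConfDir(conf));
    }
}
```

The printed path is the value to enter in the Hadoop installation directory field.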

- After entering the location close the Preferences window by pressing the OK button. Then close the Project window with the Finish button.

- You have now created your first Hadoop Eclipse project. You should see its name in the Project Explorer tab.

Creating Map/Reduce driver class

- Right-click on the newly created Hadoop project in the Project Explorer tab and select New -> Other from the context menu.

- Go to the Map/Reduce folder, select MapReduceDriver, then press the Next button as shown in the image below.

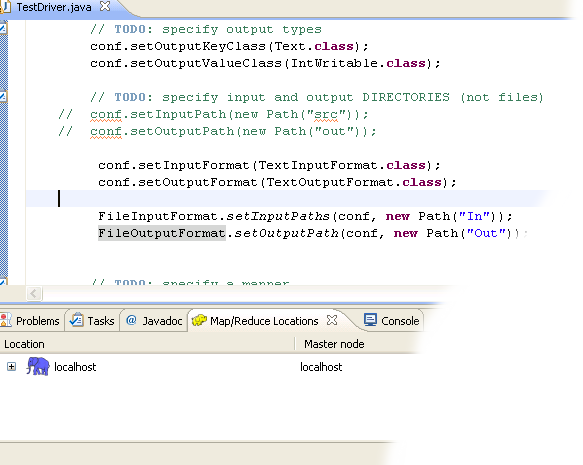

- When the MapReduce Driver wizard appears, enter TestDriver in the Name field and press the Finish button. This will create the skeleton code for the MapReduce Driver.

- Unfortunately, the Hadoop plug-in for Eclipse is slightly out of step with the recent Hadoop API, so we need to edit the driver code a bit.

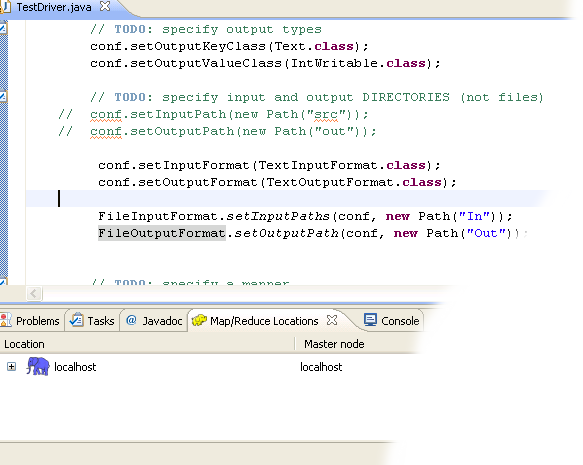

Find the following two lines in the source code and comment them out:

conf.setInputPath(new Path("src"));

conf.setOutputPath(new Path("out"));

Enter the following code immediately after the two lines you just commented out (see image below):

conf.setInputFormat(TextInputFormat.class);

conf.setOutputFormat(TextOutputFormat.class);

FileInputFormat.setInputPaths(conf, new Path("In"));

FileOutputFormat.setOutputPath(conf, new Path("Out"));

- After you have changed the code, you will see the new lines marked as incorrect by Eclipse. Click on the error icon for each line and select Eclipse's suggestion to import the missing class.

You need to import the following classes from the org.apache.hadoop.mapred package: TextInputFormat, TextOutputFormat, FileInputFormat, FileOutputFormat.

- After the missing classes are imported you are ready to run the project.

Running Hadoop Project

- Right-click on the TestDriver class in the Project Explorer tab and select Run As -> Run on Hadoop. This will bring up a window like the one shown below.

- In the window shown above, select "Choose existing Hadoop location", then select localhost from the list below. After that, click the Finish button to start your project.

- If you see console output similar to that shown below, congratulations! You have started the project successfully.
